Influence of Information Technology on the Financial Management of Public Sector Institutions

Information technology is now integrated into the financial management of public institutions, most visibly through computerized accounting. Its adoption has raised both the standard of financial management and the efficiency of financial work, and it has helped institutions adapt to the reform of the modern institutional financial system. Analyzing how information technology affects the financial management of public institutions is therefore an important part of improving the informatization of that management.

Overview of the financial management of public sector institutions

The financial management of public sector institutions arises from the financial activities and financial relationships that exist while those institutions perform their functions. It is the economic management work through which a public institution organizes its financial activities and handles its financial relationships. Looking at how Chinese institutions manage the inflow and outflow of funds, three characteristics stand out: the content of financial management is more complex, the methods of financial management are more diverse, and the demands placed on financial staff are higher.

These characteristics are closely tied to the nature of the institutions themselves. First, institutions are currently funded mainly through full appropriation, differential appropriation, self-financing, or enterprise-style management, and fund providers do not claim a right to returns on the funds they invest. Second, public institutions are generally not sold, transferred, redeemed, or liquidated, so fund providers do not share in any residual value of the unit. Third, public institutions generally do not directly create material wealth and do not operate for profit.

The role of information technology in promoting financial management of public institutions

As information technology has developed, the traditional decentralized accounting model can no longer meet the financial management requirements of modern public institutions. A centralized financial management model built on information technology has emerged in response. Information technology promotes this centralized model in the following ways:

Improving the efficiency of accounting work

With information technology, an institution’s financial information can be managed in one place, and accounting data from within the unit can be uploaded to the information management platform promptly. This removes the limitation of the earlier approach, in which each department kept its own accounts and the results were summarized afterwards. It also makes possible the cost management that the institution’s leadership requires. At the same time, centralized accounting lets the financial management department see how the institution’s funds are being used at any time, which enables dynamic supervision of its financial activities.
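The consolidation step described above can be illustrated with a minimal Python sketch. The department names, expense categories, and amounts are invented for illustration, and `consolidate` is a hypothetical helper, not part of any real accounting platform. It only shows the idea of summing entries uploaded by several departments in one central pass instead of summarizing separate ledgers afterwards.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical entries uploaded by individual departments:
# (department, expense category, amount)
entries = [
    ("Administration", "salaries", Decimal("120000.00")),
    ("Research", "salaries", Decimal("200000.00")),
    ("Research", "equipment", Decimal("45000.50")),
    ("Administration", "equipment", Decimal("5000.25")),
]


def consolidate(entries):
    """Total the amounts per category and per department in one pass."""
    by_category = defaultdict(Decimal)    # Decimal() starts each total at 0
    by_department = defaultdict(Decimal)
    for department, category, amount in entries:
        by_category[category] += amount
        by_department[department] += amount
    return dict(by_category), dict(by_department)


by_category, by_department = consolidate(entries)

for category, total in by_category.items():
    print(f"{category}: {total}")
for department, total in by_department.items():
    print(f"{department}: {total}")
```

`Decimal` is used rather than floating point so that currency amounts add up exactly.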

Strengthening accounting methods

Traditional financial accounting relied mainly on manual work. Even when staff used electronic calculators, calculation errors were inevitable. With information technology in place, the accounting methods used in financial management have become more rigorous and complete. For example, many advanced accounting methods can now be applied, allowing financial accounting to be managed comprehensively and improving the accuracy and integrity of accounting data.

Supporting the accounting supervision function

By building information systems for financial operations, all of a public institution’s financial activities can be brought under an effective supervision system. For example, the centralized treasury payment system has strengthened oversight of public institutions’ expenditures: treasury payment institutions can monitor that spending through a network platform, effectively preventing various forms of corruption. Similarly, through the fiscal non-tax revenue information system, fees collected by public institutions are credited directly to a dedicated fiscal non-tax account, which prevents institutions from misappropriating special funds.

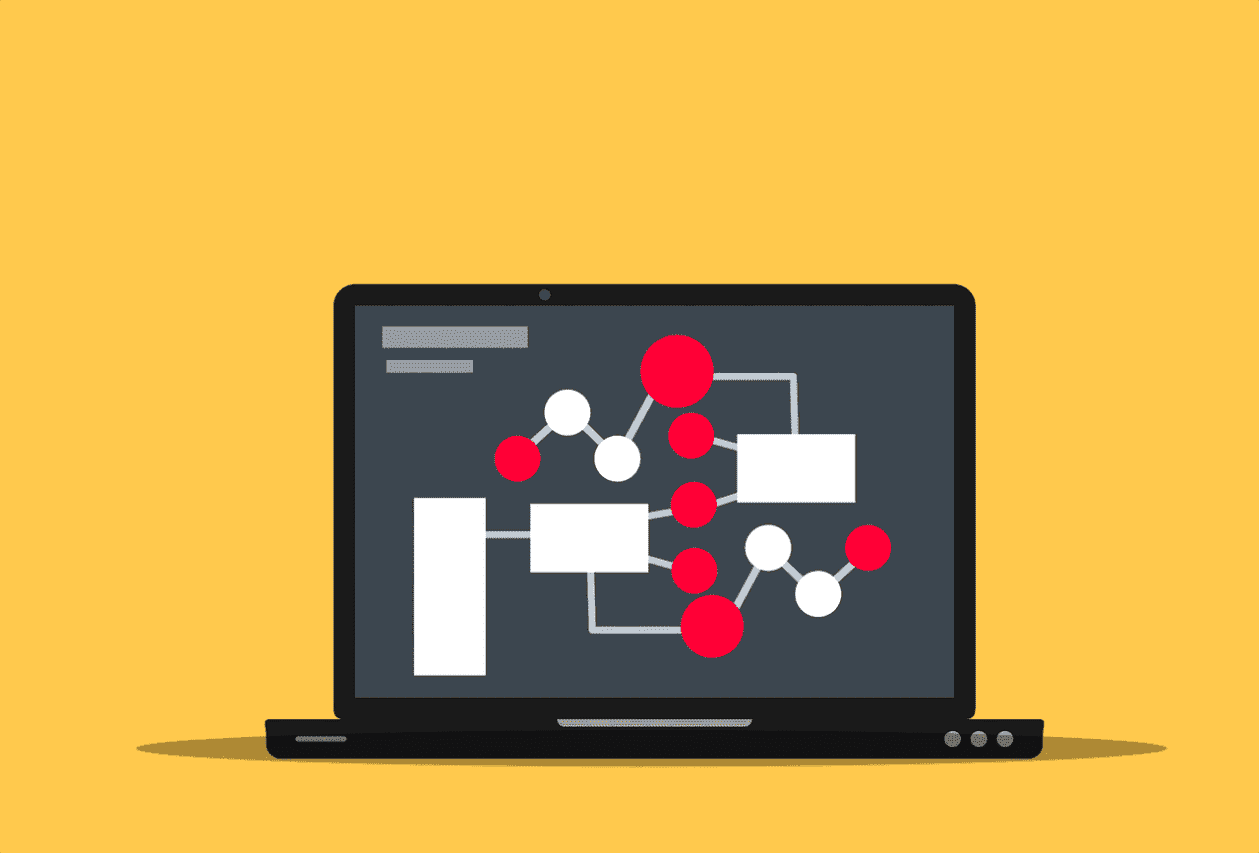

The impact of information technology on the financial management of public institutions

Impact on budget preparation management

Public institutions operate under a budget management system, commonly called the “zero-based budget” model. All financial activities of a public institution are included in the corresponding budget, and all financial management activities must be carried out according to that budget. Because public institutions have clearly defined public management functions, each institution must prepare a budget that accurately reflects its actual situation, such as its staffing, asset management, and project development.

The budget for the coming year is prepared so that all of the institution’s economic activities in that year have sufficient financial support. The accuracy of the budget depends on understanding and analyzing information about all of those activities. An institution that wants a complete picture of its financial information must therefore use modern information technology to process and analyze that information centrally.

Impact on financial decision-making and forecast management

To use public funds more efficiently and to make financial decisions more rigorous and accurate, institutions must start from the financial management model and establish a sound mechanism for financial decision-making and forecasting. First, institutions should use automated computer processing, based on mature and well-founded mathematical models and the unit’s own financial data, to analyze that data carefully, improve the accuracy of financial information, and give financial managers the information they need. Second, institutions should use the financial information management platform to analyze the relevant financial information comprehensively, in particular by comparing the unit’s current budget with the execution of the previous year’s budget, and use the result as a reference when preparing the unit’s budget.
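As a rough illustration of reviewing the previous year’s budget execution, the Python sketch below computes a variance and an execution rate for each budget item. The item names and figures are invented, and `execution_report` is a hypothetical helper. The source does not prescribe a particular formula, so the execution rate used here (actual spending divided by budget) is an assumption.

```python
from decimal import Decimal

# Hypothetical previous-year data: item -> (budgeted amount, actual spending)
previous_year = {
    "personnel": (Decimal("500000"), Decimal("490000")),
    "assets": (Decimal("200000"), Decimal("230000")),
    "projects": (Decimal("300000"), Decimal("150000")),
}


def execution_report(lines):
    """Return the variance and execution rate (percent) for each budget item."""
    report = {}
    for item, (budget, actual) in lines.items():
        variance = actual - budget  # positive means overspent
        rate = (actual / budget * 100).quantize(Decimal("0.1"))
        report[item] = {"variance": variance, "execution_rate_pct": rate}
    return report


report = execution_report(previous_year)

for item, figures in report.items():
    print(item, figures["variance"], f'{figures["execution_rate_pct"]}%')
```

Items with an execution rate far from 100 percent are natural candidates for closer review when the next budget is prepared.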

Impact on financial accounting management

The change in the financial management model has shifted financial accounting from after-the-fact accounting to accounting before and during the event, and the object of accounting has changed from static to dynamic. The traditional practice of disclosing financial information at fixed intervals has also changed: accounting data, indicator execution, indicator balances, and other information are transmitted to the financial department in real time. This greatly enriches the content of financial information, increases the transparency of institutions’ financial information, and raises its practical value.

Impact on fund payment management

Supported by information technology, China has implemented a centralized treasury payment system. It replaces the “real allocation model”, in which the financial department allocated funds directly to the bank accounts of public institutions. Under the centralized system, a public institution submits an application when it needs to purchase goods or pay for labor services. After the centralized treasury payment institution reviews the application, the funds are paid directly from the centralized payment account at the commercial bank to the payee, and that account is then settled with the People’s Bank of China treasury.
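The sequence of application, review, payment, and settlement can be sketched as a small state machine in Python. This is only a model of the order of steps described above, not of any real treasury system: the class and function names are hypothetical, and the review rule (approve when the amount fits within a remaining budget) is an assumption made so the example has something to check.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from enum import Enum, auto


class Status(Enum):
    SUBMITTED = auto()
    APPROVED = auto()
    REJECTED = auto()
    PAID = auto()
    SETTLED = auto()


@dataclass
class PaymentRequest:
    institution: str
    payee: str
    amount: Decimal
    status: Status = Status.SUBMITTED
    history: list = field(default_factory=list)

    def advance(self, expected: Status, new: Status) -> None:
        """Move to a new status only from the expected one, keeping a trail."""
        if self.status is not expected:
            raise ValueError(f"cannot move from {self.status.name} to {new.name}")
        self.history.append(self.status)
        self.status = new


def review(request, remaining_budget):
    """Assumed rule: approve positive amounts that fit the remaining budget."""
    approved = Decimal("0") < request.amount <= remaining_budget
    request.advance(Status.SUBMITTED, Status.APPROVED if approved else Status.REJECTED)
    return approved


def pay(request):
    """Payment goes from the centralized payment account straight to the payee."""
    request.advance(Status.APPROVED, Status.PAID)


def settle(request):
    """The centralized payment account is settled with the treasury afterwards."""
    request.advance(Status.PAID, Status.SETTLED)


request = PaymentRequest("Institution A", "Supplier B", Decimal("8000"))
review(request, remaining_budget=Decimal("10000"))
pay(request)
settle(request)

too_large = PaymentRequest("Institution A", "Supplier C", Decimal("50000"))
review(too_large, remaining_budget=Decimal("10000"))

print(request.status.name, too_large.status.name)
```

Because each step checks the current status, a request cannot be paid before it is reviewed, and the `history` list gives a simple audit trail of the steps it passed through.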

The centralized treasury payment system has improved the efficiency with which public institutions use funds, reduced the operating costs of fiscal funds, prevented the embezzlement, misappropriation, and withholding of fiscal funds, and brought the financial accounting authority of public institutions under effective supervision.

In summary, information technology not only affects the financial accounting model and the financial decision-making mechanism of public institutions, but also actively promotes reform of their financial management systems. We should therefore vigorously promote the informatization of financial management and work to raise the level of financial management in institutions.