Characteristics of MIS (management information systems): An MIS is a set of systems that helps managers at different levels make better decisions by providing the information they need, including for long-term planning. A management information system is not a monolithic entity but a collection of systems that gives the user a monolithic feel as far as the delivery, transmission, and storage of relevant information are concerned.

Characteristics of Management Information Systems (MIS) Explained

The different subsystems working in the background have different objectives but work in concert with each other to satisfy the overall requirement of managers for good-quality information. A management information system can be installed either by procuring off-the-shelf systems or by commissioning a completely customized solution.

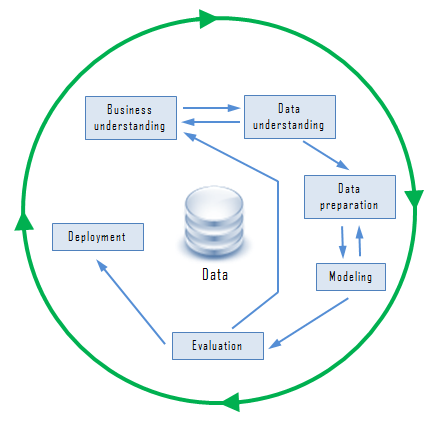

A management information system has the following characteristics:

System approach:

The information system follows a systems approach. The systems approach implies a holistic approach to the study of the system and its performance in achieving the objective for which it was formed.

Management-oriented:

An MIS should be designed using a top-down approach. The top-down approach means that system development starts from the determination of management needs and overall business objectives. The management-oriented characteristic of MIS also implies that management actively directs the system development effort.

Need-based:

MIS design and development should follow the information needs of managers at different levels, that is, the strategic planning level, the management control level, and the operational control level.

Exception-based:

MIS should be developed on the exception-based reporting principle. An exception is an abnormal situation, that is, one in which the maximum, minimum, or expected values vary beyond set limits. In such cases, the exception should be reported to the decision-maker at the required level.
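As a minimal sketch of exception-based reporting, the Python snippet below reports only the values that fall outside their allowed limits. The metric names, the limits, and the `find_exceptions` helper are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Limit:
    """Allowed range for one monitored value (hypothetical structure)."""
    minimum: float
    maximum: float


def find_exceptions(readings, limits):
    """Return (name, value) pairs for readings outside their allowed range."""
    exceptions = []
    for name, value in readings.items():
        limit = limits[name]
        if value < limit.minimum or value > limit.maximum:
            exceptions.append((name, value))
    return exceptions


# Illustrative limits and readings, not taken from any real system.
limits = {
    "inventory_level": Limit(minimum=100, maximum=500),
    "daily_output": Limit(minimum=800, maximum=1200),
}
readings = {"inventory_level": 60, "daily_output": 950}

report = find_exceptions(readings, limits)
print(report)  # only inventory_level is outside its limits
```

Values within their limits produce no report entry, so the decision-maker sees only the situations that need attention.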

Future-oriented:

Besides exception-based reporting, MIS should also look to the future. In other words, MIS should not merely provide past or historical information. Rather, it should provide information based on projections, on the basis of which actions may be initiated.
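One simple way to turn historical figures into a projection is a moving average. The sketch below is only one of many possible forecasting methods; the sales figures and the `moving_average_forecast` helper are assumptions made for illustration.

```python
def moving_average_forecast(history, window=3):
    """Project the next value as the mean of the last `window` values."""
    if len(history) < window:
        raise ValueError("not enough history for the chosen window")
    recent = history[-window:]
    return sum(recent) / window


# Hypothetical monthly sales figures.
monthly_sales = [120, 130, 125, 140, 150]

forecast = moving_average_forecast(monthly_sales)
print(f"Projected sales for next month: {forecast:.1f}")
```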

Integrated:

Integration is significant because of its ability to produce more meaningful information. For example, to develop an effective production scheduling system, it is necessary to balance such factors as set-up costs, workforce, overtime rates, production capacity, inventory level, capital requirements, and customer services. Integration means taking a comprehensive view of the subsystems that operate within the company.

Common data flows:

Because of the integration concept of MIS, there is an opportunity to avoid duplication and redundancy in data gathering, storage, and dissemination. System designers are aware that a few key source documents account for much of the information flow. For example, customer orders are the basis for billing the customer for the goods ordered, setting up accounts receivable, initiating production activity, sales analysis, sales forecasting, etc.
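The idea of a common data flow can be sketched in Python: a single list of customer orders is captured once and then feeds receivables, production demand, and sales analysis. The order fields and figures below are hypothetical.

```python
from collections import defaultdict

# One shared source document: customer orders (illustrative data).
orders = [
    {"customer": "Acme", "item": "widget", "quantity": 10, "unit_price": 4.0},
    {"customer": "Acme", "item": "gear", "quantity": 2, "unit_price": 15.0},
    {"customer": "Birch", "item": "widget", "quantity": 5, "unit_price": 4.0},
]


def order_total(order):
    """Amount billed for a single order."""
    return order["quantity"] * order["unit_price"]


receivables = defaultdict(float)     # amount owed per customer
production_demand = defaultdict(int)  # units required per item

for order in orders:
    receivables[order["customer"]] += order_total(order)
    production_demand[order["item"]] += order["quantity"]

# Sales analysis reuses the same orders rather than a separate data set.
total_sales = sum(order_total(order) for order in orders)

print(dict(receivables))
print(dict(production_demand))
print(total_sales)
```

Because every view is derived from the same orders, the data is gathered and stored once and no view can drift out of step with the others.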

Characteristics of Good Information in Management Information Systems

For information to be useful to the decision maker, it must have certain characteristics and meet certain criteria.

Some of the characteristics of good information are discussed below:

Understandable:

Since information is already in a summarized form, it must be understood by the receiver so that he will interpret it correctly. He must be able to decode any abbreviations, shorthand notations, or acronyms contained in the information.

Relevant:

Information is good only if it is relevant. This means that it should be pertinent and meaningful to the decision maker and should be in his area of responsibility.

Complete:

It should contain all the facts that the decision maker needs to satisfactorily solve the problem at hand. Nothing important should be left out. Although information cannot always be complete, every reasonable effort should be made to obtain it.

Available:

Information may be useless if it is not readily accessible in the desired form when it is needed. Advances in technology have made information more accessible today than ever before.

Reliable:

Information should be trustworthy enough to be counted on. It should be accurate, consistent with facts, and verifiable. Inadequate or incorrect information generally leads to decisions of poor quality. For example, sales figures that have not been adjusted for returns and refunds are not reliable.

Concise:

Too much information is a big burden on management and cannot be processed accurately and in time because of “bounded rationality”. Bounded rationality describes the limits of the thinking process, which cannot sort out and process large amounts of information. Accordingly, information should be to the point and just enough – no more, no less.

Timely:

The information must be delivered at the right time and in the right place to the right person. Premature information can become obsolete or be forgotten by the time it is needed.

Similarly, some crucial decisions can be delayed because proper and necessary information is not available in time, resulting in missed opportunities. Accordingly, the time gap between the collection of data in the central database and the presentation of the proper information to the decision maker must be reduced as much as possible.

Cost-effective:

Information is not desirable if the solution is more costly than the problem. The cost of gathering data and processing it into information must be weighed against the benefits derived from using such information.
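The weighing of cost against benefit can be sketched as a simple comparison. The function name and the figures below are hypothetical, and in practice the expected benefit is usually an estimate rather than a known amount.

```python
def is_cost_effective(gathering_cost, processing_cost, expected_benefit):
    """Return True when the expected benefit exceeds the total cost."""
    total_cost = gathering_cost + processing_cost
    return expected_benefit > total_cost


# Illustrative figures only.
worth_producing = is_cost_effective(
    gathering_cost=2000, processing_cost=1000, expected_benefit=5000
)
not_worth_producing = is_cost_effective(
    gathering_cost=2000, processing_cost=1000, expected_benefit=2500
)

print(worth_producing, not_worth_producing)
```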